Maurice Jakesch, , Daniel Buschek, Lior Zalmanson, Mor Naaman・🏆 Best Paper Honourable Mention @ CHI’23

How Co-writing with Opinionated Language Models Can Shape the Opinion Writing Process, Maurice Jakesch, Mor Naaman・[ Working Paper ]

This project focuses on the influence of large language models like GPT on opinion formation and expression. It examines how these models, when configured to generate certain views more often, can affect users' written opinions and held beliefs. This overarching thread of research encompasses two studies:

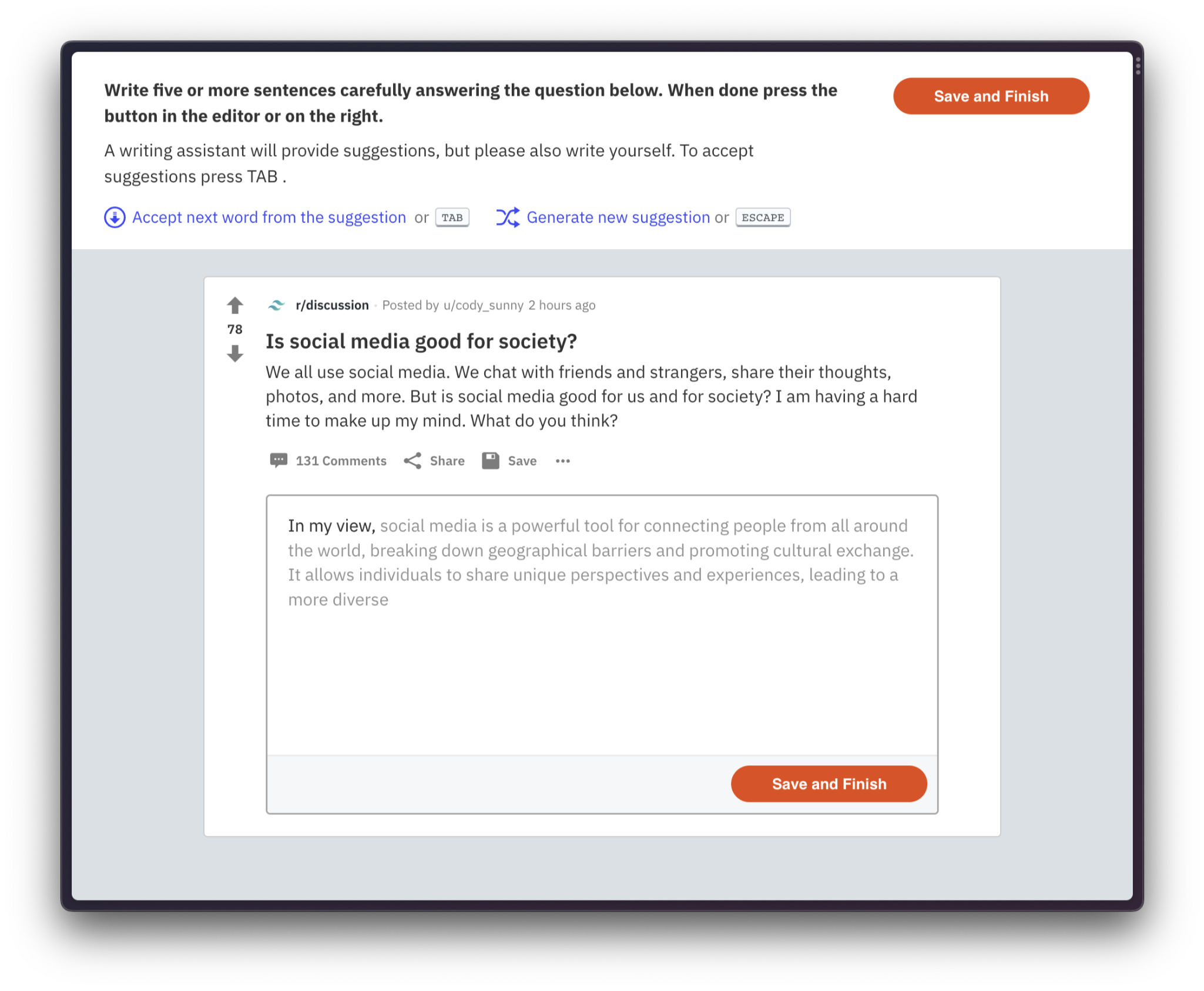

This study explores the concept of latent persuasion, where language models subtly influence opinions by making it easier to express certain views over others. This extends the idea of nudge theory to the field of language and persuasion. We designed and developed a ‘Reddit-like’ writing interface in which participants expressed their views on the question ‘Is social media good or bad for society?’. In an online experiment involving 1506 participants, we examined whether interacting with a writing assistant powered by an opinionated language model would affect the opinions expressed in users' writing and subsequently alter their attitudes toward social media. Participants in the treatment groups wrote with an assistant configured either to support the position ‘social media is good for society’ or to oppose it.

The results revealed that using an opinionated language model significantly influenced the participants' written opinions and shifted their attitudes in a follow-up survey. Participants interacting with a language model supportive of social media were more likely to express favorable views towards social media in both their writings and the survey. Conversely, those interacting with a model critical of social media expressed more negative views. This finding highlights the potential of AI language technologies to shape public opinion and suggests a need for careful monitoring and engineering of the opinions embedded in these technologies.

In this study, we used a mixed-method approach to investigate the mechanisms behind LM-induced influence, combining the interaction data from the first study with qualitative retrospective protocol interviews.

We logged interactions in the text editor, capturing user and AI text, timestamps, and changes between states. Post-experiment, 19 participants were interviewed. We used a custom tool to replay their writing process in order to elicit retrospective protocols from participants about their interactions with the AI assistant. Transcripts from the interviews were coded and analyzed systematically. The process involved open coding, memo writing, and thematic analysis.

Interaction logs were preprocessed to define "units" for analysis, each representing a sequence of writing done by a participant between two AI suggestions. We used GPT-3.5-Turbo to extract the primary topic from each text unit, then clustered the topics using a BERTopic pipeline and hierarchical agglomerative clustering. Finally, we conducted a temporal ‘idea origin’ analysis to determine whether each topic in the final composition was first proposed by the AI or by the human participant. We labelled each unit as 'AI-first' or 'Human-first' based on the origin of its idea.
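The temporal ‘idea origin’ step can be illustrated with a minimal Python sketch. It is not the study's actual code: the `Unit` record, its field names, and the helper functions are hypothetical, and the topic labels are assumed to have already been produced by the extraction and clustering steps. The sketch simply attributes each topic to whichever source, AI suggestion or human writing, mentioned it first in the session.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    """One logged text unit (hypothetical schema, not the study's format)."""
    timestamp: float  # seconds since the session started
    source: str       # "ai" for a shown suggestion, "human" for typed text
    topic: str        # topic label assumed to come from the upstream steps


def label_idea_origins(units):
    """Map each topic to 'AI-first' or 'Human-first' by its earliest occurrence."""
    first_source = {}
    for unit in sorted(units, key=lambda u: u.timestamp):
        # setdefault keeps only the first source seen for each topic
        first_source.setdefault(unit.topic, unit.source)
    return {
        topic: "AI-first" if source == "ai" else "Human-first"
        for topic, source in first_source.items()
    }


def origins_in_final_text(units, final_topics):
    """Restrict the origin labels to topics present in the final composition."""
    origins = label_idea_origins(units)
    return {topic: origins[topic] for topic in final_topics if topic in origins}


# Illustrative session with made-up data
session = [
    Unit(1.0, "human", "staying connected"),
    Unit(2.0, "ai", "misinformation"),
    Unit(3.5, "human", "misinformation"),
    Unit(5.0, "ai", "mental health"),
]
result = origins_in_final_text(session, ["staying connected", "misinformation"])
```

In this made-up session, "misinformation" counts as AI-first because the assistant suggested it before the participant wrote about it, even though the participant typed the final text themselves. "mental health" is dropped because it never reached the final composition.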

The central finding of the study is the risk of a ‘role shift’ in the writing process when using language model-based assistants. This shift involves writers transforming from being primary idea generators to becoming 'reactive' evaluators, editors, and extenders of AI-generated ideas. In this new role, writers often cede the initiative for generating original ideas to the language model, leading to a significant influence of AI on the writing process. This change marks a departure from traditional writing dynamics, where personal experiences and memories are the main sources of ideas. Instead, the AI's suggestions become the starting point, guiding and influencing the content and direction of the writer's output. This shift has profound implications for the creative process and the authorship of ideas in AI-assisted writing.

Shift in Writer's Role: Participants moved from expressing personal opinions based on their experiences to primarily evaluating, editing, and extending AI-generated suggestions. Even when writers did not directly accept a suggestion, this reactive approach anchored the ideas they wrote about to what the AI had proposed.

AI's Dominance in Topic Initiation: A considerable portion of ideas in final compositions could be directly traced back to AI suggestions, with writers often accepting AI-generated ideas that they weren't initially planning to write about. This led to the AI predominantly setting the agenda for the topics discussed in the compositions.

AI's Effect on Topics in Writers' Compositions: The AI assistant's stance (pro- or anti-social media) affected the frequency of topics in its suggestions. This, in turn, significantly influenced the topics in participants' final compositions, even in text not directly accepted from the AI, confirming the assistant's role in shaping writers' topics and ideas.

Factors Leading to Reactive Writing: